Data engineering: The process of designing, building, and maintaining systems for collecting, storing, and analyzing data.

You can begin by sharing the files via AWS S3 or a Git repository, or by using a cloud-based database such as Google BigQuery.

To gain insights, domain teams query, process, and aggregate their analytical data together with relevant data products from other domains.

The federated governance group is typically organized as a guild consisting of representatives of all teams taking part in the data mesh.

They agree on global policies, which are the rules of play in the data mesh.

These rules define how the domain teams need to build their data products.

I’ve had the privilege of building, managing, and leading a sizeable, high-performing team of data warehouse & ELT engineers for quite some time.

We built many data warehouses to store and centralize massive volumes of data generated from many OLTP sources.

- There are no prerequisites, but Google recommends having some experience with Google Cloud Platform.

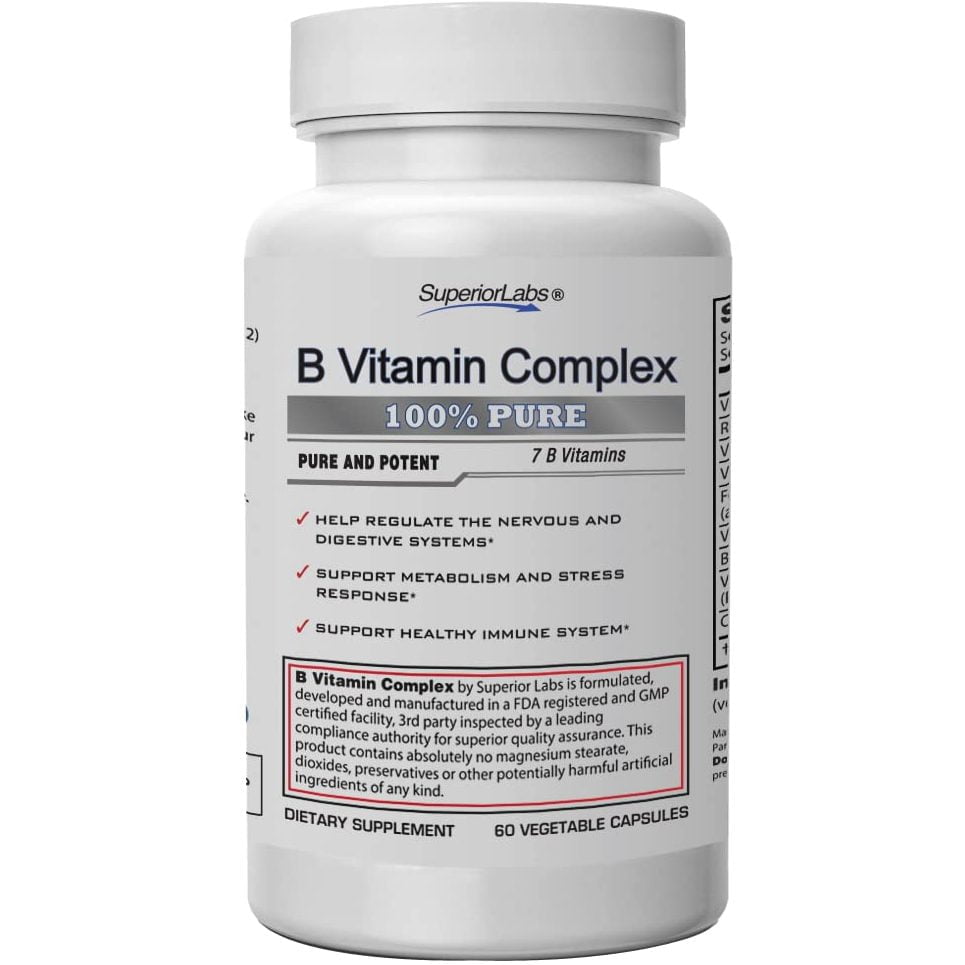

- This calls for backup and recovery strategies, such as disaster recovery plans and data backups, to ensure data can be restored in the event of a failure or disaster.

- This means that the need for data engineers is at an all-time high.

- The bigger the company, the more complex the analytics architecture, and the more data the engineer will be responsible for.

Good data stewardship, together with legal and regulatory requirements, dictates that organizations protect the data they own from unauthorized access and disclosure.

It took me around three years to transform teams of data warehouse and ETL programmers into one cohesive Data Engineering team.

The business quickly realized that it was spending a substantial amount of time administering and managing the machine instead of performing analytics on the ingested data.

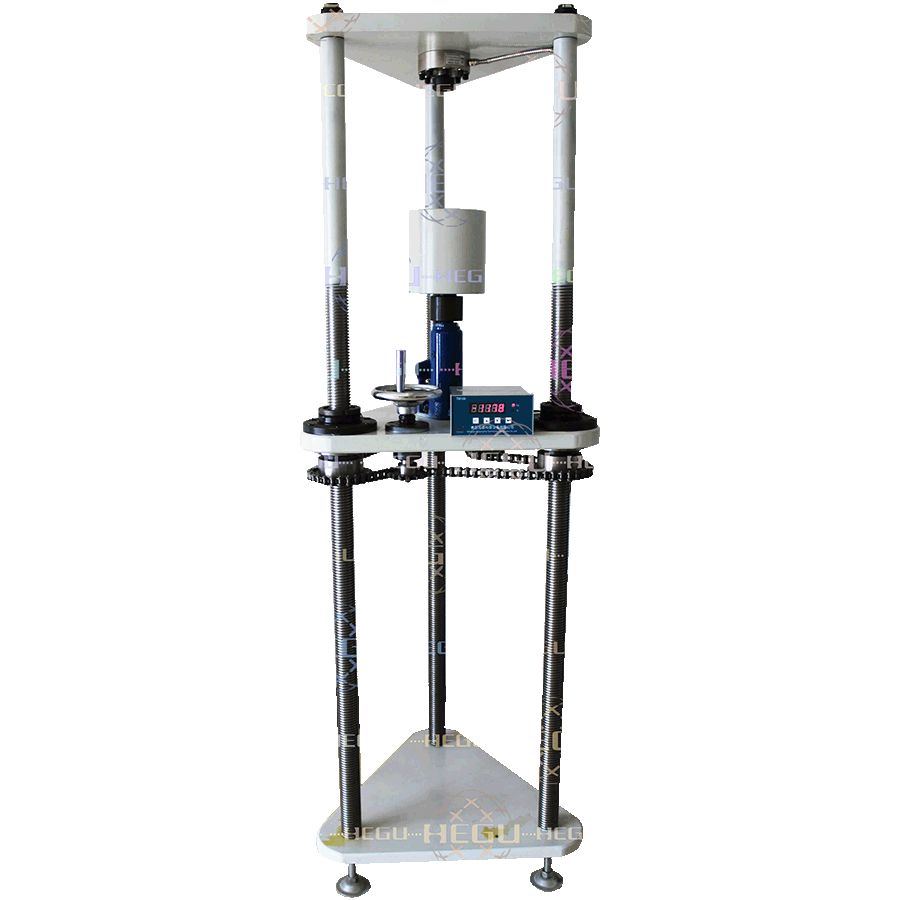

One phData customer ingests streaming data from heavy equipment fitted with various sensors.

The organization wanted to track these machines, and not only predict when they would need maintenance, but also learn how it might help customers operate their products better.

To resolve this, the business created a redesigned version for users in those countries, replacing neighborhood links with top travel destinations.

Data engineers also need to ensure that data pipelines flow continuously and keep information updated, utilizing various monitoring tools and site reliability engineering practices.
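One simple form of pipeline monitoring is a freshness check: compare each table's last successful load time against an allowed maximum age and flag anything older. The sketch below is a minimal illustration using only the Python standard library. The function name `find_stale_tables`, the table names, and the one-hour threshold are assumptions made for this example and do not come from any particular monitoring tool.

```python
from datetime import datetime, timedelta, timezone


def find_stale_tables(last_loaded, max_age, now=None):
    """Return the sorted names of tables whose latest load is older than max_age.

    last_loaded maps a table name to the timezone-aware datetime of its
    most recent successful load (hypothetical metadata for this sketch).
    """
    now = now or datetime.now(timezone.utc)
    return sorted(
        name for name, loaded_at in last_loaded.items()
        if now - loaded_at > max_age
    )


# Fixed reference time so the example is reproducible.
now = datetime(2024, 1, 1, 12, 0, tzinfo=timezone.utc)
last_loaded = {
    "orders": now - timedelta(minutes=5),   # loaded recently
    "payments": now - timedelta(hours=3),   # has not been updated for 3 hours
}

stale = find_stale_tables(last_loaded, max_age=timedelta(hours=1), now=now)
print(stale)
```

In practice the load timestamps would come from pipeline metadata, and a non-empty result would trigger an alert rather than a print statement.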

Learn the skills you need as you work toward completing the project.

Project-based learning can be a more fun and practical way to learn data engineering.

Data engineers have to acquire a range of skills related to programming languages, databases, and operating systems.

Data-intensive Applications

At SpotHero, he founded and built out their data engineering team, using Airflow as one of the key technologies.

It is also important for data engineers to work well with others.

They will often need to collaborate with data scientists, business analysts, and other stakeholders.

The data scientists use all that data for analytics and other projects that improve business operations and outcomes.

This program is designed to teach

- Another focus is Lambda architecture, which supports unified data pipelines for batch and real-time processing.

- Data engineers must understand data warehouses and data lakes and how they work.

- requires a solution that can facilitate each one of these operations.

- At the current growth rate, it is reasonable to expect both the demand for data engineers and their salaries to keep increasing steadily.

- Trust our data engineering team to design and implement data streaming pipelines that can handle large amounts of data and provide real-time insights, so that you can always stay ahead of the curve.
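The Lambda architecture mentioned in the list above can be sketched in a few lines: a batch layer periodically recomputes a view from the full event history, a speed layer keeps an incremental view of events that arrived since the last batch run, and queries merge the two. This is a toy, in-memory illustration under those assumptions. The names `batch_view`, `SpeedView`, and `query`, and the page-view events, are hypothetical and not taken from any framework.

```python
from collections import Counter


def batch_view(events):
    """Batch layer: recompute page-view totals from the full event history."""
    return Counter(event["page"] for event in events)


class SpeedView:
    """Speed layer: incrementally count events not yet covered by a batch run."""

    def __init__(self):
        self.counts = Counter()

    def ingest(self, event):
        self.counts[event["page"]] += 1


def query(page, batch, speed):
    """Serving layer: merge the batch view with the real-time view."""
    return batch[page] + speed.counts[page]


# Events already processed by the last batch run.
historical = [{"page": "home"}, {"page": "home"}, {"page": "pricing"}]
batch = batch_view(historical)

# Events that arrived after the batch run.
speed = SpeedView()
speed.ingest({"page": "home"})
speed.ingest({"page": "docs"})

print(query("home", batch, speed))
print(query("docs", batch, speed))
```

When the next batch run completes, the speed view would be reset so that events are not counted twice.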

A shared platform with a single query language and support for separated areas is a good way to start, as everything is highly integrated.

This could be Google BigQuery with tables in multiple projects that are discoverable through Google Data Catalog.

Before you understand the role of a data engineering manager, you will need

Product

Big data architecture differs from conventional data handling because it deals with volumes of rapidly changing information streams so massive that a data warehouse isn’t able to accommodate them.

The architecture that can handle such an amount of data is a data lake.

Information from DWs is aggregated and loaded into the OLAP cube, where it is precalculated and made ready for user requests.
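To make the idea of precalculation concrete, the sketch below builds a tiny cube in memory: for every combination of dimensions it sums a measure once, so a later request becomes a dictionary lookup instead of a scan over the raw rows. It is a minimal illustration, not how any particular OLAP engine stores its data. The function `build_cube`, the `region` and `product` dimensions, and the sample rows are all made up for this example.

```python
from collections import defaultdict
from itertools import combinations

DIMENSIONS = ("region", "product")


def build_cube(rows, dimensions=DIMENSIONS, measure="revenue"):
    """Precompute the sum of `measure` for every subset of `dimensions`.

    Keys are tuples of (dimension, value) pairs; the empty tuple holds
    the grand total.
    """
    cube = defaultdict(float)
    for row in rows:
        for size in range(len(dimensions) + 1):
            for dims in combinations(dimensions, size):
                key = tuple((dim, row[dim]) for dim in dims)
                cube[key] += row[measure]
    return dict(cube)


# Hypothetical warehouse rows.
rows = [
    {"region": "EU", "product": "A", "revenue": 100.0},
    {"region": "EU", "product": "B", "revenue": 50.0},
    {"region": "US", "product": "A", "revenue": 70.0},
]

cube = build_cube(rows)

# User requests are now simple lookups.
print(cube[()])                     # grand total
print(cube[(("region", "EU"),)])    # total for one region
```

The trade-off is that the number of precomputed cells grows quickly with the number of dimensions, which is one reason cubes are built from aggregated rather than raw data.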

Identify and fix issues by analyzing outputs and training data to find improvements, then implement changes and test the outcomes with the broader team.

The book not only discusses the principles of data mesh, but also presents an execution strategy.

In this post, Zhamak Dehghani introduced the idea and core principles of data mesh.

Aggregate domains and consumer-aligned domains can include any foreign data that is relevant to their consumers’ use cases.

You’re too small and don’t have multiple independent engineering teams.

If you’re the first team, you might have to skip this step for now, move on to level 4, and be the first to provide data for others.

Team Collaboration And Communication

This is because of the need for root access to hardware and for additional functionality that Windows and Mac OS don’t provide.

Therefore, data engineers will want to get familiar with these operating systems if they haven’t done so already.

They are in charge of creating dashboards for insights and developing machine-learning strategies.

In addition, they work directly with decision-makers to understand their information needs and develop approaches for meeting them.

Data engineers build and maintain the data infrastructure that connects an e-commerce organization’s data ecosystems.

Programming: Programming is a fundamental skill for data engineers, as most of the tasks they perform rely on writing scripts.

As data has become the backbone of informed decision-making, having powerful tools and systems to collect, store, and analyze data will determine the success of your business endeavours.

At Kulsys, we plan the design and development process for data collection and analysis systems based purely on your business model and customer acquisition goals.

A cloud-based data processing service, Dataflow is aimed at large-scale data ingestion and low-latency processing through fast parallel execution of analytics pipelines.

Dataflow has an advantage over Airflow in that it supports multiple languages, such as Java, Python, and SQL, and engines such as Flink and Spark.
