Decision tree learning: A type of predictive modelling that is used in statistics and maths.

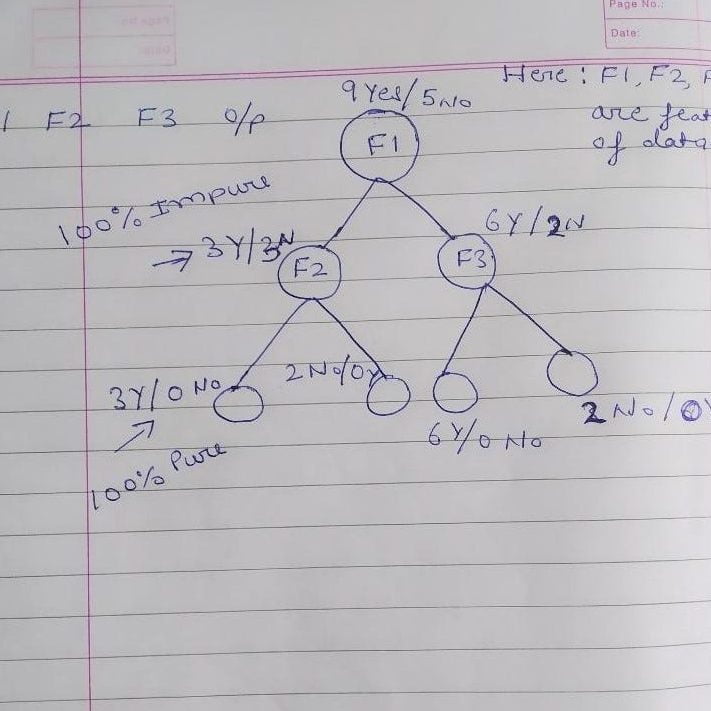

In the below output image, the predicted output and real test output are given. We can clearly see that there are some values in the prediction vector, which are different from the real vector values. Gini index is a measure of impurity or purity used while creating a decision tree in the CART algorithm. According to the value of information gain, we split the node and build the decision tree. The decisions or the test are performed on the basis of features of the given dataset. Astudy conducted in 2009 in Australiatracked a cohort of over 6,000 people and whether or not they had a major depressive disorder over a four-year period. The researchers took inputs like tobacco use, alcohol use, employment status and more to create a decision tree that could be used to predict the risk of a major depressive disorder.

One of the important algorithms is the Decision Tree used for classification and a solution for regression problems. As it is a predictive model, Decision Tree Analysis is done via an algorithmic approach where a data set is split into subsets as per conditions. The name itself says it is a tree-like model in the form of if-then-else statements. The deeper is the tree and more are the nodes, the better is the model. Is random forests, which build an exhaustive group of decision trees . Often, random forests build a more predictive model than either CART or boosted trees. However, decision trees do not require any assumptions of linearity in the data.

Finally, let’s visualize the classification results using ggplot2. First, we will create a new dataset called rf_class with the predicted and actual classifications based on the random forest model. Then, we will visualize the correct and incorrect classifications using a bar chart and a point plot with jittering. The output shows different importance measures for the predictors that we used in the model. MeanDecreaseAccuracy and MeanDecreaseGini represent the overall classification error rate and the total decrease in node impurities from splitting on the variable, averaged over all trees. In the output, math and reading are the two predictors that seem to influence the model performance substantially, whereas EPIST and HEDRES are the least important variables. Furthermore, we need to check the classification accuracy of the estimated decision tree with the test data.

More From Towards Data Science

Consequently, practical decision-tree learning algorithms are based on heuristics such as the greedy algorithm where locally optimal decisions are made at each node. Such algorithms cannot guarantee to return the globally optimal decision tree. To reduce the greedy effect of local optimality, some methods such as the dual information distance tree were proposed. Decision tree learning is a method commonly used in data mining. The goal is to create a model that predicts the value of a target variable based on several input variables. Gradient boosted trees uses an ensemble of decision trees to make predictions. Random forest uses an ensemble of decision trees to make predictions.

- Calculate information gain based on gini impurity and partition of data from previous step.

- Analyzing representative portions of the available information — sampling — can help speed development time on models and enable them to be deployed more quickly.

- Usually, real-world datasets have a large number of features, which will result in a large number of splits, which in turn gives a huge tree.

- This is because such a model is not a generalized one and it won’t be able to predict well on new and unseen examples.

Next, we will tune the parameters of the decision tree to increase its accuracy. One of those parameters is ‘min_samples_split’, which is the minimum number of samples required to split an internal node. Its default value is equal to 2 because we cannot split on a node containing only one example/ sample. Since, this is a classification problem, we will import the DecisionTreeClassifier function from the sklearn library. Next, we will set the ‘criterion’ to ‘entropy’, which sets the measure for splitting the attribute to information gain.

Benefits Of Predictive Modeling

We can increase the order of the model, but it requires more data to obtain good probability estimation. In practice, it is rare to create a Markov model with an order higher than 5 or 6. A solution to this is to use recurrent neural networks or transformer networks , which can also learn to generate sequences in an autoregressive way but can remember things further in the past. Such a model is not often used in machine learning, but it is heavily used in other areas, such as signal processing. The resulting model is better than with the default covariance and has good extrapolation abilities, which is attractive for modeling time series. The possibility of creating such structured covariances broadens the capability of the Gaussian process method and allows us to bring knowledge that we might have about a particular problem.

- ID3 is harder to use on continuous data than on factored data .

- You may tune other parameters of the decision tree and check how they affect the decision boundary in a similar way.

- Order to placing attributes as root or internal node of the tree is done by using some statistical approach.

- Unsupervised models use traditional statistics to classify the data directly, using techniques like logistic regression, time series analysis and decision trees.

Now you must be thinking how do I know what should be the root node? To decide this, there is a metric called “Entropy” which is the amount of uncertainty in the dataset. The process of growing a decision tree is computationally expensive. At each node, each candidate splitting field must be sorted before its best split can be found. In some algorithms, combinations of fields are used and a search must be made for optimal combining weights. Pruning algorithms can also be expensive since many candidate sub-trees must be formed and compared. In terms of disadvantages, trees do not usually have the same level of predictive accuracy of other methods, such as regression.

One strategy to achieve this is to grow a very large tree and then prune it back in order to obtain a subtree. Decision trees, or classification trees and regression trees, predict responses to data.

The candidate with the maximum value will split the root node, and the process will continue for each impure node until the tree is complete. Performs multi-level splits when computing classification trees. Training a logistic regression classifier to predict people’s gender based on their weight and height. Their downside comes from their small memory of what happened in the past of the sequence.

Example, consider a coin toss whose probability of heads is 0.5 and probability of tails is 0.5. Here the entropy is the highest possible, since there’s no way of determining what the outcome might be. Alternatively, consider a coin which has heads on both the sides, the entropy of such an event can be predicted perfectly since we know beforehand that it’ll always be heads. In other words, this event has no randomness hence it’s entropy is zero. In particular, lower values imply less uncertainty while higher values imply high uncertainty. Introduction Decision Trees are a type of Supervised Machine Learning where the data is continuously split according to a certain parameter. The tree can be explained by two entities, namely decision nodes and leaves.

Trending Topic:

Market Research Facilities Near Me

Market Research Facilities Near Me  Cfd Flex Vs Cfd Solver

Cfd Flex Vs Cfd Solver  Tucker Carlson Gypsy Apocalypse

Tucker Carlson Gypsy Apocalypse  CNBC Pre Market Futures

CNBC Pre Market Futures  Best Gdp Episode

Best Gdp Episode  Stock market index: Tracker of change in the overall value of a stock market. They can be invested in via index funds.

Stock market index: Tracker of change in the overall value of a stock market. They can be invested in via index funds.  PlushCare: Virtual healthcare platform. Physical and mental health appointments are conducted over smartphone.

PlushCare: Virtual healthcare platform. Physical and mental health appointments are conducted over smartphone.  Mutual Funds With Low Initial Investment

Mutual Funds With Low Initial Investment  Jeff Gural Net Worth

Jeff Gural Net Worth  Robinhood Snacks: Short daily email newsletter published by investment company Robinhood. It rounds up financial news.

Robinhood Snacks: Short daily email newsletter published by investment company Robinhood. It rounds up financial news.