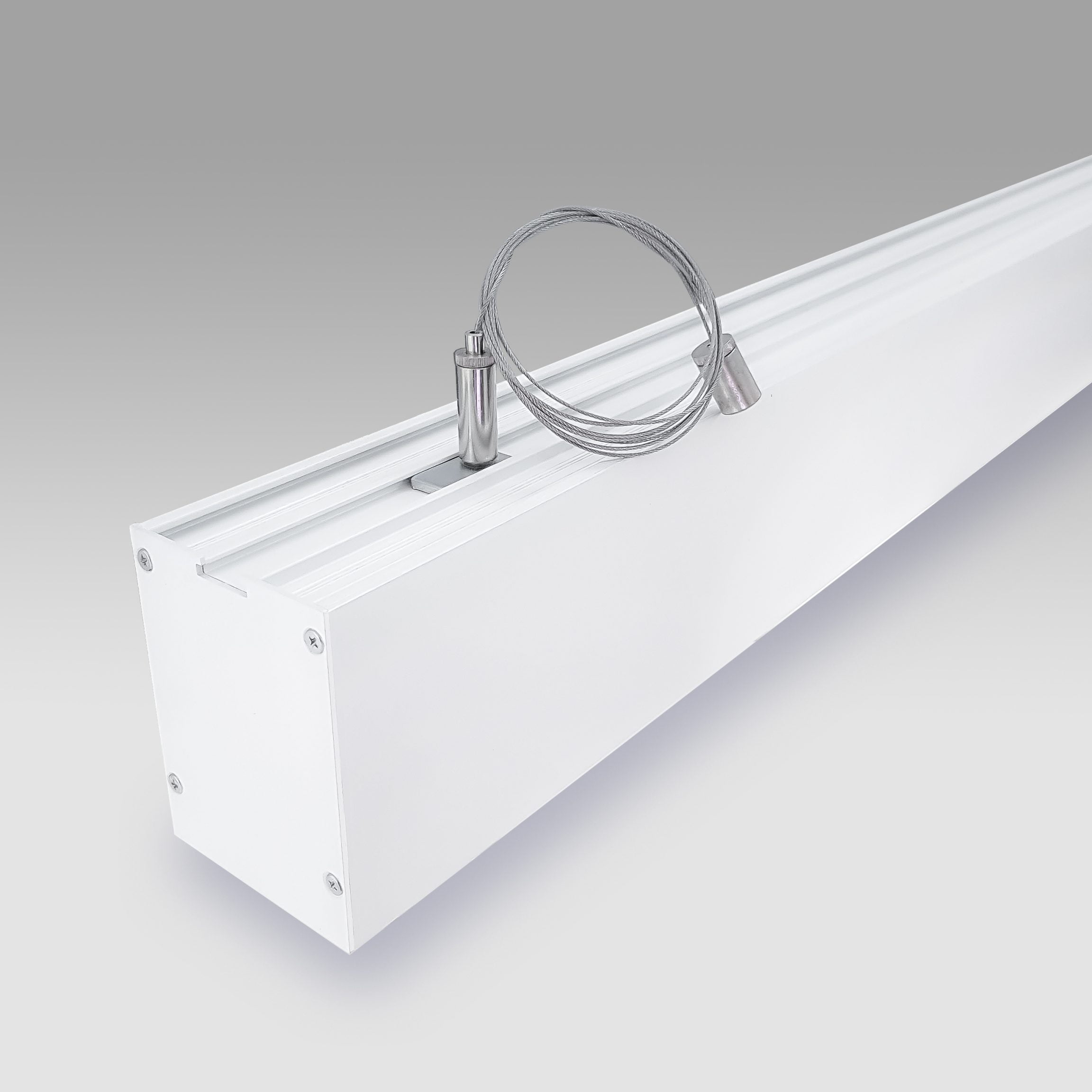

Feedforward neural network: A network in which information is processed along a single forward path, from input to output, with no cycles.

This process can be performed using both supervised and unsupervised approaches, but supervised methods are usually preferred.

Face recognition starts by taking an input from a video or image; detection is then performed after converting the input to greyscale.

The features in greyscale are applied one at a time and weighed against pixel values.

CNN models give higher accuracy than earlier techniques by overcoming issues such as light intensity and facial expressions, using models trained on more samples.

In the field of AI, deep learning has gained much popularity and is a trending area of investigation.

- Although these technologies have advanced significantly and have a wide range of applications, power electronics and machine drives have been unaffected.

- Our training dataset has 723 training examples, and our test dataset has 242 test examples.

- The effectiveness of the output is measured in terms of % accuracy and % loss for different numbers of epochs.

- To verify the validity of the RNH-QL algorithm, different learning methods are used in simulation tests on the same problem in the same environment.

the gradients resemble white noise.

This is bad for training, and the problem is called the shattered gradients problem.

Residual connections tackle this by introducing some spatial structure to the gradients, thus helping the training process.
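As a rough illustration of what a residual connection is, the sketch below adds a block's input back onto the output of a small two-layer transform. The function names, shapes, and weights are assumptions made for this example, and the code only shows the forward pass; it does not measure gradient structure.

```python
import numpy as np

def relu(x):
    return np.maximum(x, 0.0)

def residual_block(x, w1, w2):
    """Return relu(x + F(x)), where F is a small two-layer transform."""
    fx = relu(x @ w1) @ w2   # the residual branch F(x)
    return relu(x + fx)      # the skip connection adds the input back

rng = np.random.default_rng(0)
x = rng.normal(size=(4, 8))              # hypothetical batch of 4 inputs
w1 = rng.normal(scale=0.1, size=(8, 8))  # hypothetical weights
w2 = np.zeros((8, 8))                    # zeroed branch: block reduces to relu(x)
out = residual_block(x, w1, w2)
```

With the branch weights set to zero, the block simply passes the (rectified) input through, which is one way to see that the skip path keeps a direct route from input to output.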

CNN is a popular DL methodology, inspired by the animal visual cortex.

CNNs are very much like ANNs, which can be viewed as acyclic graphs formed by a well-arranged collection of neurons.

However, in CNNs, the neurons in the hidden layers are connected only to a subset of the neurons in the preceding layer, unlike in a regular ANN model.
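The difference in connectivity can be made concrete with a small one-dimensional sketch. The helper `local_connection_mask` and the sizes used here are hypothetical, chosen only to count connections; a real convolutional layer would also share weights across positions.

```python
import numpy as np

def local_connection_mask(n_in, kernel_size):
    """mask[i, j] is 1 when output neuron i is connected to input neuron j."""
    n_out = n_in - kernel_size + 1
    mask = np.zeros((n_out, n_in), dtype=int)
    for i in range(n_out):
        mask[i, i:i + kernel_size] = 1  # each neuron sees only a local window
    return mask

mask = local_connection_mask(n_in=6, kernel_size=3)
dense_connections = mask.size        # a fully connected layer would use every entry
local_connections = int(mask.sum())  # a CNN-style layer uses only the local windows
```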

It is specifically designed to produce a desired output d from a layer of p neurons.

From the aforementioned postulate, we can conclude that the connection between two neurons is strengthened if the neurons fire at the same time and may weaken if they fire at different times.
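A minimal sketch of this idea, assuming the plain Hebbian update (weight change proportional to the product of pre- and post-synaptic activity), is shown below. The learning rate and activity vectors are made up for illustration. Note that with 0/1 activities this plain rule only strengthens connections; the weakening case needs a variant, for example bipolar (+1/-1) activities.

```python
import numpy as np

def hebbian_update(w, pre, post, lr=0.1):
    """Strengthen w[i, j] when post[i] and pre[j] are active together."""
    return w + lr * np.outer(post, pre)

w = np.zeros((2, 3))               # 2 output neurons, 3 input neurons
pre = np.array([1.0, 0.0, 1.0])    # inputs 0 and 2 fire
post = np.array([1.0, 0.0])        # only output 0 fires
w = hebbian_update(w, pre, post)   # only weights between co-active pairs grow
```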

A nerve cell is a special biological cell that processes information.

According to one estimate, there are a huge number of neurons, approximately 10^11, with numerous interconnections, approximately 10^15.

We proposed a feed-forward DNN to model measured path loss data over a wide range of frequencies (0.8–70 GHz) in urban low-rise and suburban wide-street scenarios for the NLOS link type.

Using the random search method, we optimized the hyperparameters of the proposed DNN over a simple range of search values.

The number of hidden neurons is searched over a range of 100 values, and the number of hidden layers over a range of 10 values.
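The search procedure can be sketched roughly as follows. The exact ranges (1 to 100 neurons, 1 to 10 layers), the trial count, and `toy_objective` are assumptions for illustration; in practice the objective would train the DNN and return a validation loss.

```python
import random

def random_search(objective, n_trials, seed=0):
    """Sample hyperparameters at random and keep the lowest-scoring set."""
    rng = random.Random(seed)
    best_params, best_score = None, float("inf")
    for _ in range(n_trials):
        params = {
            "hidden_neurons": rng.randint(1, 100),  # assumed range of 100 values
            "hidden_layers": rng.randint(1, 10),    # assumed range of 10 values
        }
        score = objective(params)
        if score < best_score:
            best_params, best_score = params, score
    return best_params, best_score

def toy_objective(params):
    # Hypothetical stand-in for a validation loss; not from the source.
    return abs(params["hidden_neurons"] - 40) + abs(params["hidden_layers"] - 3)

best_params, best_score = random_search(toy_objective, n_trials=50)
```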

The optimized DNN model neither overfits nor underfits on the training and testing datasets.

An FSWNN usually includes multiple hidden layers, and its network size is large.

An excessively large neural network may degrade generalization performance because of overfitting, or lead to excessive computation time.

Hopfield neural network was invented by Dr. John J. Hopfield in 1982.

It consists of a single layer which contains one or more fully connected recurrent neurons.

The Hopfield network is often useful for auto-association and optimization tasks.
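To illustrate auto-association, here is a small sketch assuming the common Hebbian (outer-product) storage rule with bipolar patterns and synchronous updates. The two stored patterns and the function names are invented for the example.

```python
import numpy as np

def train_hopfield(patterns):
    """Build the weight matrix from bipolar patterns via outer products."""
    p = np.asarray(patterns)
    w = p.T @ p
    np.fill_diagonal(w, 0)  # no self-connections
    return w

def recall(w, state, max_steps=10):
    """Update all neurons synchronously until the state stops changing."""
    state = np.asarray(state).copy()
    for _ in range(max_steps):
        new_state = np.where(w @ state >= 0, 1, -1)
        if np.array_equal(new_state, state):
            break
        state = new_state
    return state

p1 = np.array([1, 1, 1, 1, -1, -1, -1, -1])
p2 = np.array([1, -1, 1, -1, 1, -1, 1, -1])
w = train_hopfield([p1, p2])

noisy = p1.copy()
noisy[0] = -1               # corrupt one element of the first pattern
recalled = recall(w, noisy)  # the network settles back on p1
```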

The following figure shows the architecture of LVQ, which is quite similar to the architecture of KSOM.

As we can see, there are n input units and m output units.

Here b0k is the bias on the output unit, and wjk is the weight on the k-th unit of the output layer coming from the j-th unit of the hidden layer.

Basic Concept of Competitive Network − This network is like a single-layer feedforward network with feedback connections between the outputs.

Fully recurrent network − It’s the simplest neural network architecture because all nodes are linked to all other nodes and each node works as both input and output.

Neural networks are parallel computing devices, which are basically an attempt to make a computer model of the brain.

The main objective is to develop a system that performs various computational tasks faster than traditional systems.

These tasks include pattern recognition and classification, approximation, optimization, and data clustering.

The result of RNH-QL is shown in Fig. 8; it may be regarded as a perfect result.

In order to have a detail concerning the process of simulation, a relatively complex process of

The sigmoid (logistic) activation function gives a higher loss on the training dataset than on the testing dataset, which can cause an overfitting problem, so it is not applied in this case.

Medical applications, especially those that rely on complex proteomic and genomic measurements, are a good example of this and will benefit greatly from this capability.

Artificial intelligence refers to the creation of computer-based systems that can perform activities comparable to human intelligence.

Artificial intelligence, in fact, encompasses the entire spectrum of learning and is not limited to machine learning.

Representation learning, natural language processing, and deep learning are types of AI.

Computational programs that replicate and simulate human intelligence in learning and problem-solving are referred to as AI.

Pooling is a way to summarize a high-dimensional, and perhaps redundant, feature map into a lower-dimensional one.

Formally, pooling is an arithmetic operator like sum, mean, or max applied over a local region in a feature map in a systematic way, and the resulting pooling operations are known as sum pooling, average pooling, and max pooling, respectively.

Pooling can also function as a way to improve the statistical strength of a larger but weaker feature map by reducing it to a smaller but stronger feature map.
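The three pooling variants can be sketched in a few lines, assuming non-overlapping square windows (stride equal to the window size) on a single 2D feature map. The helper `pool2d` is written for this example and is not a library function.

```python
import numpy as np

def pool2d(feature_map, size, op):
    """Apply op (e.g. np.max) over non-overlapping size x size windows."""
    h, w = feature_map.shape
    h_out, w_out = h // size, w // size
    trimmed = feature_map[:h_out * size, :w_out * size]  # drop ragged edges
    blocks = trimmed.reshape(h_out, size, w_out, size)
    return op(blocks, axis=(1, 3))

fmap = np.arange(16, dtype=float).reshape(4, 4)
max_pooled = pool2d(fmap, 2, np.max)   # [[5, 7], [13, 15]]
avg_pooled = pool2d(fmap, 2, np.mean)  # [[2.5, 4.5], [10.5, 12.5]]
sum_pooled = pool2d(fmap, 2, np.sum)   # [[10, 18], [42, 50]]
```

In each case the 4x4 map is reduced to 2x2; only the summary statistic taken over each window differs.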

Even though stride and kernel_size allow for controlling how much scope each computed feature value has, they have a negative, and sometimes unintended, side effect of shrinking the total size of the feature map.

Informally, channels refers to the feature dimension along each point in the input.
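The shrinking effect follows from the usual output-length arithmetic for a convolution without padding or dilation. The sketch below assumes that simplified case; `conv_output_size` is a helper written for this example and the input length of 7 is arbitrary.

```python
def conv_output_size(input_size, kernel_size, stride=1):
    """Output length along one dimension, with no padding and no dilation."""
    return (input_size - kernel_size) // stride + 1

# An input of length 7 shrinks as kernel_size or stride grows.
sizes = [conv_output_size(7, k, s) for k, s in [(2, 1), (3, 1), (3, 2)]]
# sizes == [6, 5, 3]
```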

For example, in images there are three channels for every pixel in the image, corresponding to the RGB components.

A similar concept can be carried over to

Computers can examine empirical customer data more quickly and accurately to find common denominators between actions and behaviors.

Customers can then be sorted into segments or groups based on these findings.

Neural networks look for patterns between clusters of data to draw richer insights.

By looking at many customer journeys from a bird's-eye view, you may be able to identify at-risk customers and see which behaviors trigger certain outcomes, like churn.
