Gradient descent: An algorithm used to determine a function’s local minimum.

The size of the step that gradient descent takes in a single iteration is controlled by the learning rate α.

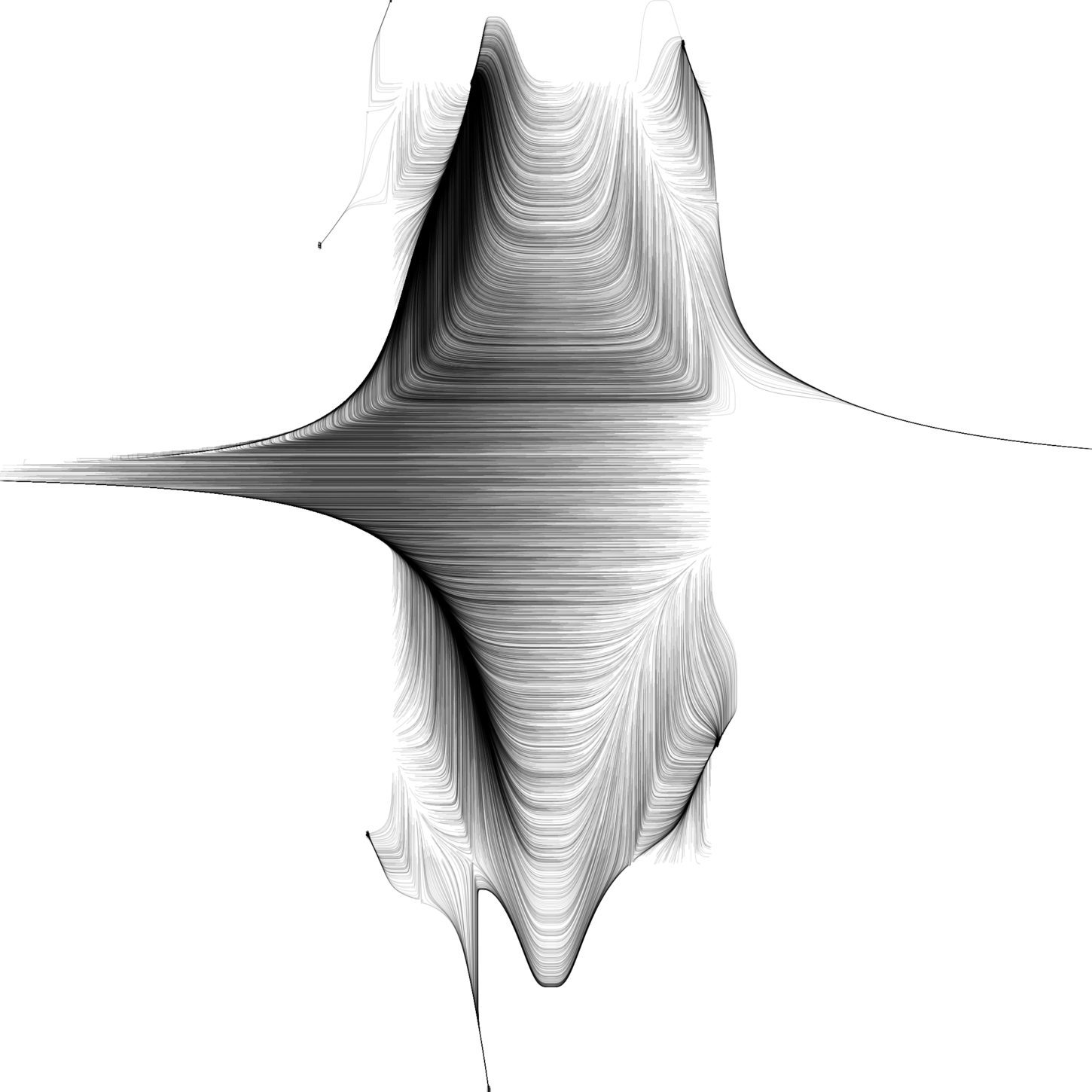

Minimizing the cost function is analogous to trying to reach the bottom of a hill.

Feeling the slope of the terrain around you is analogous to calculating the gradient, and taking a step is analogous to one iteration of the parameter update.

What if we have 1,000 samples or, in a worst-case scenario, one million samples?

Batch gradient descent would have to process all one million samples to compute the gradient for every single update.

So batch gradient descent is not a good fit for large datasets.

If the learning rate is too small, gradient descent will eventually reach the local minimum but will take a long time to do so.

Now that you understand how basic gradient descent works, you can implement it in Python.

You’ll use only plain Python and NumPy, which enables you to write concise code when working with arrays and gain a performance boost.
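As a minimal sketch of the basic loop, the function below repeatedly steps against the gradient until the update becomes negligible. The name `gradient_descent` appears later in the text, but the signature used here (`gradient`, `start`, `learn_rate`, `n_iter`, `tolerance`) is an assumption made for illustration.

```python
import numpy as np


def gradient_descent(gradient, start, learn_rate, n_iter=50, tolerance=1e-6):
    """Follow the negative gradient from `start` for at most `n_iter` steps."""
    vector = np.asarray(start, dtype=float)
    for _ in range(n_iter):
        # Step against the gradient, scaled by the learning rate.
        step = -learn_rate * np.asarray(gradient(vector), dtype=float)
        # Stop early once every component of the update is negligibly small.
        if np.all(np.abs(step) <= tolerance):
            break
        vector = vector + step
    return vector


# Example: f(v) = v**2 has gradient 2*v and its minimum at v = 0.
result = gradient_descent(gradient=lambda v: 2 * v, start=10.0, learn_rate=0.2)
```

Here `result` ends up very close to zero, the minimum of the example function.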

For extremely large problems, where computer-memory issues dominate, a limited-memory method such as L-BFGS should be used instead of BFGS or steepest descent.

Repeat until convergence (e.g. until $\nabla f$ becomes very small).

We have the direction we want to move in; now we must decide how big a step to take.

Now we can use this baseline model to obtain our predicted values $\hat{y}$.

Ridge Regression

But informally, for a function in a high-dimensional space, if the gradient is zero, then in each direction it can look either like a convex function or like a concave function.

And if we are in, say, a 10,000-dimensional space, then for this point to be a local minimum, all 10,000 directions have to look like a convex function.
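A small two-dimensional sketch can make this concrete. The function f(x, y) = x² − y² is chosen here purely for illustration (it is not from the text): its gradient is zero at the origin, yet it curves upward in one direction and downward in the other, so the origin is a saddle point rather than a minimum.

```python
import numpy as np


# Illustrative function (an assumption): f(x, y) = x**2 - y**2.
def grad_f(point):
    x, y = point
    return np.array([2.0 * x, -2.0 * y])


# The Hessian of f is constant; its eigenvalues are the curvatures
# along the principal directions.
hessian = np.array([[2.0, 0.0],
                    [0.0, -2.0]])

gradient_at_origin = grad_f(np.array([0.0, 0.0]))
curvatures = np.linalg.eigvalsh(hessian)  # eigenvalues in ascending order

is_critical_point = bool(np.allclose(gradient_at_origin, 0.0))
is_local_minimum = bool(np.all(curvatures > 0))  # every direction curves upward
is_saddle_point = bool(curvatures.min() < 0 < curvatures.max())
```

With mixed-sign curvatures, `is_critical_point` and `is_saddle_point` hold while `is_local_minimum` does not. In many dimensions, every one of the curvatures would have to be positive for the point to be a minimum.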

These initial parameters are then used to generate the predictions, i.e., the output.

After we have the predicted values we can calculate the error or the cost.
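The two steps above can be sketched for a straight-line model. The toy data, the helper names `predict` and `mse_cost`, and the choice of mean squared error as the cost are assumptions for illustration only.

```python
import numpy as np

# Toy data (made up for illustration): points lying exactly on y = 2x + 1.
x = np.array([0.0, 1.0, 2.0, 3.0])
y = np.array([1.0, 3.0, 5.0, 7.0])


def predict(x, intercept, slope):
    """Predicted values y_hat for the line intercept + slope * x."""
    return intercept + slope * x


def mse_cost(y_true, y_pred):
    """Mean squared error between observed and predicted values."""
    return np.mean((y_true - y_pred) ** 2)


# Start from initial parameters of zero, predict, then measure the cost.
y_hat = predict(x, intercept=0.0, slope=0.0)
initial_cost = mse_cost(y, y_hat)
```

Gradient descent would then adjust `intercept` and `slope` to drive this cost down. At the true parameters (1 and 2) the cost is zero.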

How the Gradient Descent Algorithm Works and How to Implement It in Python

In fact, most modern machine learning libraries have gradient descent built-in.

However, I believe that to excel in a field you should understand the tools you are using.

Although it is rather simple, gradient descent has important consequences that can make or break your machine learning algorithm.

Mini-batch Gradient Descent is faster than Batch Gradient Descent because fewer training examples are used in each training step.

Batch Gradient Descent uses the entire training set in every iteration of training.
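The difference between the two variants can be sketched as follows. This is a rough illustration for fitting a line with a mean-squared-error cost. The function names, the toy data, and parameters such as `batch_size` and `n_epochs` are assumptions, not taken from the text.

```python
import numpy as np


def mse_gradient(params, x, y):
    """Gradient of the mean squared error for the line y = params[0] + params[1] * x."""
    residual = params[0] + params[1] * x - y
    return np.array([2.0 * residual.mean(), 2.0 * (residual * x).mean()])


def minibatch_gradient_descent(x, y, start, learn_rate, batch_size, n_epochs, seed=0):
    rng = np.random.default_rng(seed)
    params = np.asarray(start, dtype=float)
    n_obs = x.shape[0]
    for _ in range(n_epochs):
        # Shuffle once per epoch, then walk through the data in small batches.
        order = rng.permutation(n_obs)
        for begin in range(0, n_obs, batch_size):
            batch = order[begin:begin + batch_size]
            # Each update looks only at the observations in the current batch.
            params = params - learn_rate * mse_gradient(params, x[batch], y[batch])
    return params


# Noise-free toy data on the line y = 1 + 2x.
x = np.linspace(0.0, 1.0, 100)
y = 1.0 + 2.0 * x
params = minibatch_gradient_descent(
    x, y, start=[0.0, 0.0], learn_rate=0.1, batch_size=10, n_epochs=500
)
```

Setting `batch_size` equal to the number of observations turns this sketch into batch gradient descent, with one update per pass over the whole training set.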

Gradient Descent is one of the most popular and widely used optimization algorithms.

Its goal is to find the minimum of a function using an iterative algorithm.

SGD is widely used for training on larger datasets, is computationally faster per update, and may enable parallel model training.
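Because each SGD update needs only one observation, the data can be consumed as a stream instead of being loaded all at once. The sketch below fits a line in plain Python. The generator `stream` is a hypothetical data source, and the names and learning rate are illustrative assumptions.

```python
import random


def sgd_linear(observations, learn_rate=0.05):
    """One pass of SGD for y = b0 + b1 * x over an iterable of (x, y) pairs."""
    b0, b1 = 0.0, 0.0
    for x, y in observations:
        residual = b0 + b1 * x - y  # error on this single observation
        # Gradient of the squared error for this one observation.
        b0 -= learn_rate * 2.0 * residual
        b1 -= learn_rate * 2.0 * residual * x
    return b0, b1


def stream(n_obs, seed=0):
    """Hypothetical data source: yields noise-free points on y = 1 + 2x one at a time."""
    rng = random.Random(seed)
    for _ in range(n_obs):
        x = rng.uniform(0.0, 1.0)
        yield x, 1.0 + 2.0 * x


b0, b1 = sgd_linear(stream(20_000))
```

Only a single `(x, y)` pair is held in memory at any time, and the fitted `b0` and `b1` approach 1 and 2 on this noise-free data.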

A review of the last note: we have our hypothesis function, and we have a way of measuring how well it fits the data.

You’ve learned how to write the functions that implement gradient descent and stochastic gradient descent.

There are also different implementations of these methods in well-known machine learning libraries.

In this section, you’ll see two short examples of using gradient descent.

You’ll also learn that it can be used in real-life machine learning problems like linear regression.

In the second case, you’ll have to modify the code of gradient_descent() as you need the info from the observations to calculate the gradient.
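One way such a modification might look is sketched below: the observations `x` and `y` are passed through to the gradient function on every iteration. The signature and the helper `ssr_gradient` are assumptions for illustration, not necessarily the version the text has in mind.

```python
import numpy as np


def ssr_gradient(x, y, b):
    """Gradient of half the mean squared residual for the line y = b[0] + b[1] * x."""
    residual = b[0] + b[1] * x - y
    return np.array([residual.mean(), (residual * x).mean()])


def gradient_descent(gradient, x, y, start, learn_rate=0.1, n_iter=50, tolerance=1e-6):
    vector = np.asarray(start, dtype=float)
    for _ in range(n_iter):
        # The gradient now depends on the observations as well as the parameters.
        step = -learn_rate * gradient(x, y, vector)
        if np.all(np.abs(step) <= tolerance):
            break
        vector = vector + step
    return vector


# Toy observations (made up) lying exactly on y = 1 + 2x.
x = np.array([0.0, 1.0, 2.0, 3.0, 4.0])
y = np.array([1.0, 3.0, 5.0, 7.0, 9.0])
b = gradient_descent(ssr_gradient, x, y, start=[0.0, 0.0], learn_rate=0.05, n_iter=5_000)
```

The returned `b` holds the estimated intercept and slope, which land close to 1 and 2 for this data.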

- This is because the steepness/slope of the hill, which determines the length of the vector, is smaller.

- So in gradient descent, we follow the negative of the gradient to the point where the cost is a minimum.

- Unfortunately, it can also happen near a local minimum or a saddle point.

- Moreover, since it needs to look at only a single observation, stochastic gradient descent can handle data sets too big to fit in memory.

- Look at the image below: in each case, the partial derivative gives the slope of the tangent.

- Something called stochastic gradient descent with warm restarts basically anneals the learning rate to a lower bound, and then restores the learning rate
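The warm-restart idea from the last point can be sketched as a learning-rate schedule. The cosine shape and fixed cycle length used here are one common choice and are assumptions. The names `lr_max`, `lr_min`, and `cycle_length` are hypothetical.

```python
import math


def warm_restart_lr(step, lr_max=0.1, lr_min=0.001, cycle_length=100):
    """Learning rate at `step`: cosine decay from lr_max toward lr_min, restarting every cycle."""
    # Progress within the current cycle, in [0, 1).
    position = (step % cycle_length) / cycle_length
    return lr_min + 0.5 * (lr_max - lr_min) * (1.0 + math.cos(math.pi * position))


schedule = [warm_restart_lr(step) for step in range(300)]
```

Within each cycle the rate falls smoothly toward `lr_min`. At steps 100 and 200 it jumps back to `lr_max`, which is the "restart".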

This method is a specific case of the forward-backward algorithm for monotone inclusions.

For constrained or non-smooth problems, Nesterov’s FGM is called the fast proximal gradient method, an acceleration of the proximal gradient method.

For the analytical method called “steepest descent”, see Method of steepest descent.

You can observe that, when simulating movement, the body essentially follows the velocity gradient $\nabla x_t$.

Large 𝜂 values may also cause issues with convergence or make the algorithm divergent.
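The effect of the learning rate is easy to see on a one-dimensional example. This sketch minimizes f(x) = x² (an illustrative choice) with three values of 𝜂; the helper name `run` and the specific rates are assumptions.

```python
def run(learn_rate, start=1.0, n_iter=50):
    """Minimize f(x) = x**2 (gradient 2*x) and return x after n_iter updates."""
    x = start
    for _ in range(n_iter):
        x -= learn_rate * 2.0 * x
    return x


too_small = run(0.001)   # barely moves away from the starting point
reasonable = run(0.1)    # ends up very close to the minimum at 0
too_large = run(1.1)     # overshoots more on every step and diverges
```

With the small rate, `x` is still near its starting value after 50 iterations. With the large rate, each update multiplies `x` by a factor whose magnitude is greater than one, so the iterates grow instead of shrinking.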

The gradient descent algorithm is an approximate and iterative method for mathematical optimization.

You can use it to approach the minimum of any differentiable function.

For the decrease of the cost function is optimal for first-order optimization methods.

local minima in the loss contour of the “one-example-loss”, allowing us to move away from it.

Okay, so far, the tale of Gradient Descent appears to be a really happy one.
