MLPerf

To stay on the leading edge of industry trends, MLPerf continues to evolve, holding new tests at regular intervals and adding new workloads that represent the state of the art in AI.

MLPerf Tiny v0.5 marks a significant milestone in MLCommons’ line-up of MLPerf inference benchmark suites.

MLPerf Tiny benchmarks will stimulate tinyML innovation in the academic and research communities and push the state of the art forward in embedded machine learning.

- Dell Technologies has long been focused on advancing, democratizing, and optimizing HPC to make it accessible to anyone who wants to use it.

- The NVIDIA AI platform showcases leading performance and versatility in MLPerf Training, Inference, and HPC for the most demanding, real-world AI workloads.

- A consortium of AI researchers and developers from more than 30 organizations developed these benchmarks and continues to evolve them steadily.

- Performance results are based on testing as of the dates shown in configurations and may not reflect all publicly available updates.

For example, one of the submissions was done on Fugaku, which has tens of thousands of nodes.

“We wanted the opportunity to measure weak scaling, which is the way you run multiple jobs, because the reality for really large-scale HPC clusters is that they’re typically not running one job.

“To reflect that, we built this throughput metric: if you’re training multiple models concurrently, what is the throughput of those models?” said David Kanter, executive director of MLCommons.
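To make the weak-scaling idea concrete, here is a minimal sketch of a throughput metric for several models trained concurrently on one cluster. It assumes the result is expressed as models trained per minute and that the measurement window runs from the earliest job start to the latest job finish; the `TrainingInstance` class and `models_per_minute` function are hypothetical names, and the official MLPerf HPC timing rules may define the window differently.

```python
from dataclasses import dataclass


@dataclass
class TrainingInstance:
    """One concurrently running training job (hypothetical; times in seconds)."""
    start_s: float
    end_s: float


def models_per_minute(instances: list[TrainingInstance]) -> float:
    """Aggregate throughput: models trained per minute of wall-clock time.

    Simplifying assumption: the window spans from the earliest start to the
    latest finish across all concurrent instances.
    """
    if not instances:
        raise ValueError("need at least one training instance")
    window_s = max(i.end_s for i in instances) - min(i.start_s for i in instances)
    if window_s <= 0:
        raise ValueError("measurement window must be positive")
    return len(instances) / (window_s / 60.0)
```

Under this definition, running more models side by side raises throughput only if the slowest job does not stretch the window proportionally, which is exactly the cluster-level behavior a single-job time-to-train metric cannot capture.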

The importance of software was also highlighted by Jordan Plawner, senior director of AI products at Intel.

During the MLCommons press call, Plawner explained what he sees as the difference between ML inference and training workloads with regard to hardware and software.

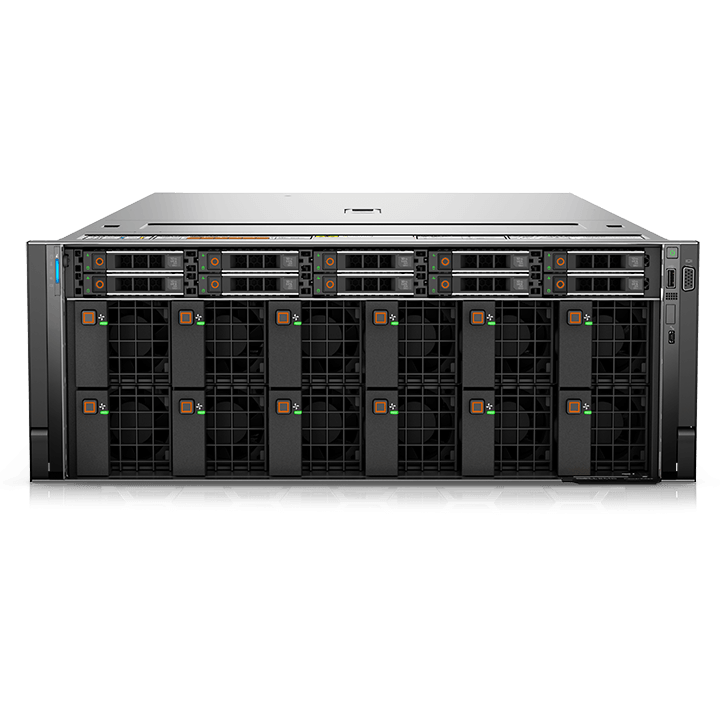

Dell and Nvidia have partnered to provide unprecedented acceleration and flexibility for AI, data analytics and HPC workloads to help enterprises tackle some of the world’s toughest computing challenges.

With so few submissions, their widely varying configurations and sizes, and the overwhelming use of Nvidia GPUs as accelerators, it’s difficult to draw many meaningful comparisons.

MLPerf Results Show Advances in Machine Learning Inference

To promote “healthcare + AI” and help create a smart healthcare ecosystem, Winning Health Technology Group Co., Ltd., AMAX and Intel have cooperated extensively on AI applications.

This case study describes how the three parties successfully innovated and optimized AI-assisted devices for healthcare scenarios, further driving the adoption of AI in the medical industry.

We will concentrate on the MLPerf Inference v2.1 Open datacenter and Closed datacenter results.

Progress is about improvement; Lenovo sees improvement as a basis for customer satisfaction.

As technology progresses, Lenovo will continue to benchmark itself against the competition using MLPerf benchmarks.

For instance, we apply Progressive Image Resizing, which slowly increases the image size throughout training.
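As a rough illustration of how such a schedule can work, the sketch below computes the image side length for each epoch. The shape of the schedule (a linear ramp over the first part of training, then a hold at full resolution), the default sizes, the rounding to a multiple of 32, and the function name `progressive_image_size` are all assumptions for illustration, not the authors' implementation.

```python
def progressive_image_size(
    epoch: int,
    total_epochs: int,
    min_size: int = 128,
    max_size: int = 224,
    ramp_fraction: float = 0.8,
    multiple: int = 32,
) -> int:
    """Return the training image side length for a given epoch (hypothetical).

    The size grows linearly from min_size to max_size over the first
    ramp_fraction of training, then stays at max_size so the final epochs
    train at full resolution. Sizes are rounded down to a multiple of
    `multiple` and clamped to [min_size, max_size].
    """
    ramp_epochs = max(1, int(total_epochs * ramp_fraction))
    progress = min(epoch / ramp_epochs, 1.0)
    size = min_size + progress * (max_size - min_size)
    size = int(size) // multiple * multiple
    return max(min_size, min(size, max_size))


# Example: sizes for a hypothetical 10-epoch run.
schedule = [progressive_image_size(e, 10) for e in range(10)]
```

Early epochs on small images are cheaper per step, and the hold at full size lets the model adapt to the evaluation resolution. This assumes the network accepts variable input sizes (for example, a CNN ending in global average pooling).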

These results demonstrate why improvements in the training procedure can matter as much as, if not more than, specialized silicon, custom kernels, or compiler optimizations.

Because these efficient algorithmic techniques can be delivered purely through software, we argue that they are both more valuable and more accessible to enterprises.

The most advanced silicon from Habana Labs is currently the Gaudi2 system, which was announced in May.

The latest Gaudi2 results show gains over the first set of benchmark results that Habana Labs reported in the MLPerf Training update in June.

According to Intel, Gaudi2’s time-to-train in TensorFlow improved by 10% for both the BERT and ResNet-50 models.

The goal of MLPerf is to give developers a way to evaluate hardware architectures and the wide range of evolving machine learning frameworks.

NVIDIA® Jetson AGX Orin™ also continued to deliver leading system-on-a-chip inference performance for edge and robotics.

The NVIDIA AI platform delivered leading performance across all MLPerf Training v2.1 tests, both per chip and at scale.

In contrast, Plawner said that ML inference is typically a single-node problem without the same distributed aspects, which gives vendor technologies a lower barrier to entry than ML training.

The metrics can identify relative levels of performance and also highlight improvements over time in both hardware and software.

The new MLPerf benchmark results reported today include Training 2.1, for ML training; HPC 2.0, for large systems including supercomputers; and Tiny 1.0, for small and embedded deployments.

However, if a given benchmark is submitted, all of the required scenarios for that benchmark must also be submitted.
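The rule above can be expressed as a simple completeness check. In this minimal sketch, the `REQUIRED_SCENARIOS` table is a simplified assumption (datacenter: Server and Offline; edge: SingleStream and Offline); the actual per-benchmark requirements are defined in the MLPerf Inference rules for each round. The function name `missing_scenarios` is hypothetical.

```python
# Simplified assumption: minimum required scenarios per system category.
# Check the MLPerf Inference rules for the exact per-benchmark requirements.
REQUIRED_SCENARIOS: dict[str, set[str]] = {
    "datacenter": {"Server", "Offline"},
    "edge": {"SingleStream", "Offline"},
}


def missing_scenarios(
    category: str, submitted: dict[str, set[str]]
) -> dict[str, set[str]]:
    """Return, per submitted benchmark, the required scenarios that are absent.

    `submitted` maps a benchmark name to the scenarios submitted for it.
    Benchmarks that were not submitted at all are not checked: only a
    submitted benchmark must include all of its required scenarios.
    """
    required = REQUIRED_SCENARIOS[category]
    return {
        bench: required - scenarios
        for bench, scenarios in submitted.items()
        if required - scenarios
    }
```

An empty result means every submitted benchmark is complete; skipping a benchmark entirely is allowed, but submitting only some of its required scenarios is not.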

The results also show that advances throughout the ML stack improve performance.

Within six months, offline throughput improved by 3x, while latency was reduced by as much as 12x.
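Because throughput is higher-is-better and latency is lower-is-better, the two "x" factors are computed in opposite directions. This small sketch makes that explicit; the function names are illustrative and not part of any MLPerf tooling.

```python
def throughput_gain(old_qps: float, new_qps: float) -> float:
    """Throughput is higher-is-better, so the gain factor is new / old."""
    if old_qps <= 0 or new_qps <= 0:
        raise ValueError("throughput values must be positive")
    return new_qps / old_qps


def latency_reduction(old_ms: float, new_ms: float) -> float:
    """Latency is lower-is-better, so the reduction factor is old / new."""
    if old_ms <= 0 or new_ms <= 0:
        raise ValueError("latency values must be positive")
    return old_ms / new_ms
```

Read this way, a 3x offline gain means the new throughput is three times the old one, and a 12x latency reduction means the new latency is one twelfth of the old one.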

ML is an evolving field with changing use cases, models, data sets and quality targets.

Because the MLPerf v1.0 submission rules are more restrictive, the v0.7 results generally do not meet v1.0 requirements.

Required number of runs for submission and audit tests: one run is required to submit the Server scenario.
