VSeeFace: Face and hand tracking software that turns video input into animated avatar output. Used by “virtual YouTubers.”

This article was co-authored by wikiHow staff writer, Hannah Madden.

Hannah Madden is a writer, editor, and artist currently living in Portland, Oregon.

In 2018, she graduated from Portland State University with a B.S.

Hannah enjoys writing articles about conservation, sustainability, and eco-friendly products.

When she isn’t writing, you can find Hannah working on hand embroidery projects and listening to music.

To customize your model, try programs like Blender, Daz3D, or Pixiv's VRoid Studio.

To remove an already created expression, press the corresponding Clear button and then Calibrate.

While the tracker is running, many lines starting with something like Took 20ms should appear.

While a face is in the view of the camera, lines with Confidence should appear too.
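As a rough illustration of what to look for in that output, the sketch below scans console lines for the two patterns described above. The exact line format is an assumption based only on the "Took 20ms" and "Confidence" wording here, and the function name `summarize_tracker_output` is hypothetical, not part of VSeeFace.

```python
import re

# Assumed shape of a timing line ("Took 20ms ..."); the real output may differ.
TOOK_RE = re.compile(r"Took\s+(\d+(?:\.\d+)?)\s*ms")


def summarize_tracker_output(lines):
    """Summarize tracker console lines: frame count, average time, face seen."""
    times = []
    face_seen = False
    for line in lines:
        match = TOOK_RE.search(line)
        if match:
            times.append(float(match.group(1)))
        # Lines mentioning Confidence are expected only while a face is in view.
        if "Confidence" in line:
            face_seen = True
    average_ms = sum(times) / len(times) if times else None
    return {"frames": len(times), "average_ms": average_ms, "face_seen": face_seen}


if __name__ == "__main__":
    # Hypothetical sample lines, written to match the description above.
    sample = ["Took 20ms", "Took 30ms", "Confidence: 0.93"]
    print(summarize_tracker_output(sample))
```

If no timing lines show up at all, the tracker is probably not processing frames. If timing lines appear without any Confidence lines, the camera is likely running but no face is being detected.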

A second window should show the camera view and red and yellow tracking points overlaid on the face.

If this isn’t the case, something is wrong with this side of the process.

As far as resolution is concerned, the sweet spot is 720p to 1080p.

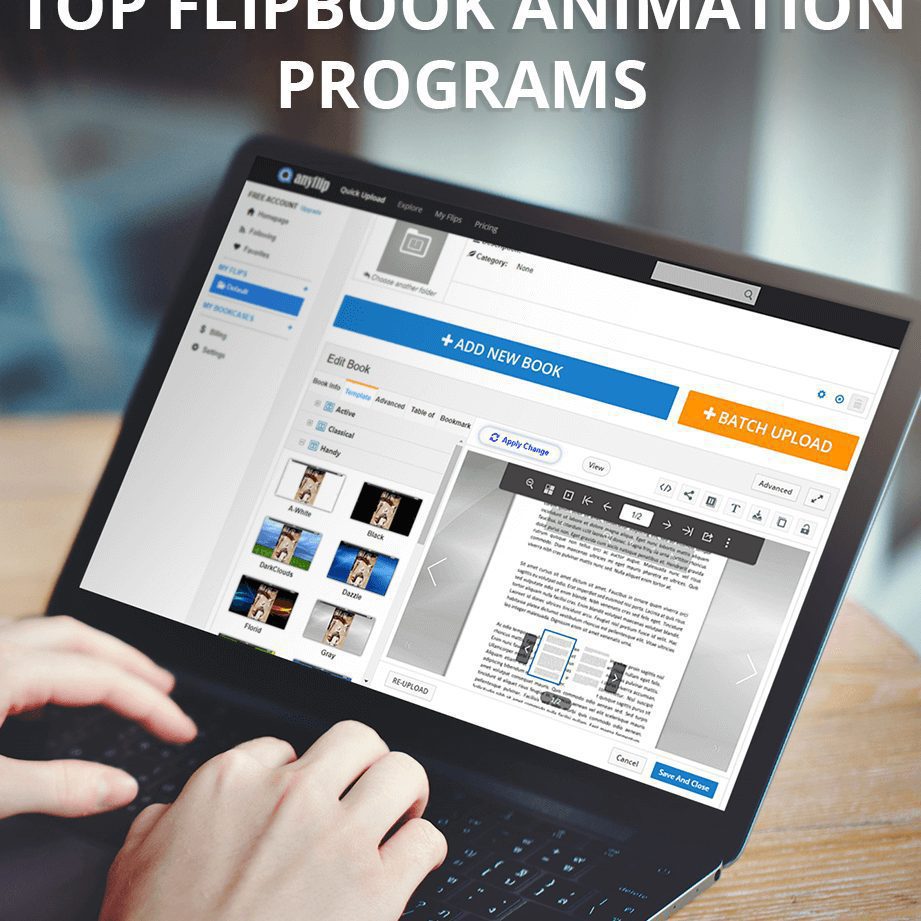

VSeeFace

This requires a specially prepared avatar containing the required blendshapes.

You can find an example avatar containing the necessary blendshapes here.

An easy, but not free, way to apply these blendshapes to VRoid avatars is to use HANA Tool.

It is also possible to use VSeeFace with iFacialMocap through iFacialMocap2VMC.

If your model uses ARKit blendshapes to control the eyes, set the gaze strength slider to zero. Otherwise, both bone-based eye movement and ARKit blendshape-based gaze may get applied.

Track face features will apply blendshapes, eye bone and jaw bone rotations according to VSeeFace’s tracking.

If only Track fingers and Track hands to shoulders are enabled, the Leap Motion tracking will be applied, but camera tracking will stay disabled.

If the additional options are enabled, camera-based tracking will be enabled and the selected parts of it will be applied to the avatar.
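The rule described above can be sketched as a small decision function. This is only a model of the behavior as stated here, not VSeeFace's actual code. The function name `describe_tracking` is hypothetical, and it assumes that either Leap Motion option on its own is enough to apply Leap Motion tracking.

```python
# Option names taken from the text above.
LEAP_OPTIONS = {"Track fingers", "Track hands to shoulders"}


def describe_tracking(enabled_options):
    """Return which tracking sources end up active for a set of enabled options."""
    enabled = set(enabled_options)
    # Assumption: either Leap Motion option alone is enough to apply Leap tracking.
    leap_motion = bool(enabled & LEAP_OPTIONS)
    # Camera tracking turns on only when an option beyond the two above is enabled.
    camera = bool(enabled - LEAP_OPTIONS)
    return {"leap_motion": leap_motion, "camera": camera}


if __name__ == "__main__":
    print(describe_tracking({"Track fingers", "Track hands to shoulders"}))
    print(describe_tracking({"Track fingers", "Track face features"}))
```

With only the two hand options enabled, the camera stays off. Adding Track face features, or any other option, turns camera tracking on as well.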

In my experience, the existing webcam-based hand tracking solutions don’t work well enough to warrant spending the time to integrate them.

OSC/VMC Protocol Support

Because the virtual camera keeps running even while the UI is shown, using it instead of a game capture can be useful if you often change settings during a stream.

The virtual camera supports loading background images, which can be useful for VTuber collabs over Discord calls, by setting a unicolored background.

Try setting the camera settings on the VSeeFace starting screen to default settings.

The selection will be marked in red, but you can ignore that and press start anyway.

After the first export, you have to put the VRM file back into your Unity project to actually set up the VRM blend shape clips and other things.

This process is a bit advanced and requires some general knowledge about using command-line programs and batch files.

To do this, copy either the whole VSeeFace folder or the VSeeFace_Data\StreamingAssets\Binary\ folder to the second PC, which should have the camera attached.

Running this file will first ask for some information to set up the camera and then run the tracker process that usually runs in the background of VSeeFace.

If you entered the right information, it will show an image of the camera feed with overlaid tracking points, so do not run it while streaming your desktop.

- It supports audio capture, which is used for lip sync.

- You can also use the Vita model to test this, which is known to have a working eye setup.

- To use these parameters, the avatar needs to be specifically set up for it.

- If you just want to be popular enough to sell models and make a simple living, there are people who already do this.

“VSeeFace is a free, highly configurable face and hand tracking VRM avatar puppeteering program for virtual youtubers with a focus on robust tracking and high image quality.

VSeeFace can send, receive and combine tracking data using the VMC protocol, which also allows iPhone perfect sync support through Waidayo like this.”
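The VMC protocol mentioned in the quote is built on OSC messages sent over UDP. As a minimal sketch of what sending a single blendshape value could look like, the code below encodes OSC messages by hand using only the Python standard library. The addresses `/VMC/Ext/Blend/Val` and `/VMC/Ext/Blend/Apply` and the port 39539 are assumptions based on the VMC protocol specification and must match the receiver settings in your setup. The blendshape name `Joy` is only an example, and the function names are hypothetical.

```python
import socket
import struct


def _osc_string(text):
    """Encode an OSC string: ASCII bytes, null-terminated, padded to 4 bytes."""
    data = text.encode("ascii") + b"\x00"
    padding = (4 - len(data) % 4) % 4
    return data + b"\x00" * padding


def osc_message(address, *args):
    """Build a raw OSC message supporting string and float arguments only."""
    type_tags = ","
    payload = b""
    for arg in args:
        if isinstance(arg, float):
            type_tags += "f"
            payload += struct.pack(">f", arg)  # big-endian 32-bit float
        elif isinstance(arg, str):
            type_tags += "s"
            payload += _osc_string(arg)
        else:
            raise TypeError(f"unsupported OSC argument type: {type(arg).__name__}")
    return _osc_string(address) + _osc_string(type_tags) + payload


def send_blendshape(name, value, host="127.0.0.1", port=39539):
    """Send one blendshape value, then the apply message (assumed VMC addresses)."""
    with socket.socket(socket.AF_INET, socket.SOCK_DGRAM) as sock:
        sock.sendto(osc_message("/VMC/Ext/Blend/Val", name, float(value)), (host, port))
        sock.sendto(osc_message("/VMC/Ext/Blend/Apply"), (host, port))
```

Calling `send_blendshape("Joy", 1.0)` would send the two UDP packets to the local machine. Whether the avatar reacts depends on the receiving application listening on that port and on the model actually having a blendshape clip with that name.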

Adobe Character Animator is a 2D cartoon performance recording solution.

I mention it as an example of video capture controlling part of a puppet.

There are sometimes problems with blend shapes not being exported correctly by UniVRM.

VSeeFace Tutorials

Also see the model issues section for more information on things to look out for.

One of my own challenges is how to create 3D animated content with relatively low effort and decent quality.

I have been accumulating a library of animation clips in Unity that I stitch into a sequence to make up a scene, but it is proving frustrating.

Another approach is to use performance-based recordings, where you perform the action live and record it.

This usually provides a reasonable starting point that you can adjust further to your needs.

Afterwards, run the Install.bat in the same folder as administrator.

Sometimes they lock onto some object in the background, which vaguely resembles a face.

After loading the project in Unity, load the provided scene inside the Scenes folder.

FaceRig also has real-time facial capture technology, so anyone with a webcam can digitally embody a character.

It aims to be an open creation platform, so everyone can make their own characters.

In addition, if you want to add decorations to the model, you can do that as well.

Conventional live streaming on Twitch or YouTube takes only a few pieces of technology: a webcam, an inexpensive microphone, and broadcasting software, and you are all set.
